Every year, data collection methods and hardware get more advanced and more accurate. Agencies and organizations alike race to deploy them.

Higher resolution cameras. Longer flight endurance from drones. Satellites that can spot a license plate from orbit.

Each upgrade promises clearer pictures and better, faster insights. But software isn’t keeping pace.

Moreover, just because we can collect that much data doesn’t mean it’s the correct data.

Collectively, we’re rarely asking the important question: What decision(s) will this data actually inform?

Without a clear answer to that question, advanced sensors do something counterintuitive. They make your data problem worse, because every new sensor adds volume that no one has a plan to use.

Fortunately, there is a solution. It involves software that can keep up with hardware changes.

By IBM's widely cited estimate, poor data quality already costs the U.S. economy alone about $3.1 trillion a year. And right now, we're repeating a mistake the intelligence community has been making for decades, just with more pixels and better processors.

In the military, human intelligence collectors work as sensors themselves.

They, in turn, build networks of people designed to collect information. They develop trust with sources. They ask questions, send people out to collect atmospherics, track movement patterns, and verify locations.

The work carries real risk. Time. Trust. Human safety.

Then those operators compile reams of paper reports, transmit them up the chain, and wait to see if they made a difference.

Too often, sadly, they don't.

Or if they do, the HUMINT collectors never hear about it.

The quality of any given sensor isn’t the issue here.

The problem lies in mission design. Tasking often comes down vague and broad:

• Build out the human infrastructure.

• Develop an operation on a terrorist target.

• Find a connection to a high-value target.

If nothing else, those phrases sound incredibly important. But what’s missing is clarity of purpose.

What you don’t see is a defined mission outcome tied to the information being gathered. Without clear outcomes, the system will only be flooded with data that no one knows how to use.

Any process that is flawed at the foundation (for example, capturing a bunch of data you didn’t need) gets far worse when you start to scale collection activities. You’re left with huge costs, questionable impact, and plenty of expensive reports that are filed away and forgotten.

What's missing from the equation is an effects-driven foundation.

Whether the sensor is a person talking to a local source or a drone orbiting a target, the same principle applies: collection should be outcome-driven, not curiosity-driven.

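Before any sensor deploys, human or autonomous, three questions need answers: what specific question the collection will answer, what action the answer will drive, and how the data will combine with other sources.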

Not "provide situational awareness." Try "validate whether material is moving across the border at night" or "confirm construction timeline for adversarial radar installation."

Collection should tie directly to an objective. Detect. Verify. Validate. Track. Each verb should connect to an action someone will take with the information.

No sensor operates in isolation. The footage needs to combine with signals intelligence, human reports, and historical data. Nearly 67% of organizations struggle to integrate new sensor data with existing information sources, leading to data silos and missed strategic opportunities. If you can't describe how it stitches together, you're creating another silo.
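The three checks above can be sketched as a small pre-deployment gate. This is a minimal illustration, not part of any real tasking system: the `CollectionRequirement` class, its field names, and the example decision text are all assumptions made up for this sketch. Only the verbs and the sample questions come from the text above.

```python
from dataclasses import dataclass, field
from typing import List

# Verbs taken from the list above: Detect, Verify, Validate, Track.
ALLOWED_VERBS = {"detect", "verify", "validate", "track"}

# Phrasing called out above as too vague to task against.
VAGUE_PHRASES = ("situational awareness",)


@dataclass
class CollectionRequirement:
    """One tasking for a sensor, human or autonomous (hypothetical structure)."""
    question: str   # the specific question collection should answer
    verb: str       # detect / verify / validate / track
    decision: str   # the action someone will take with the answer
    fuses_with: List[str] = field(default_factory=list)  # sources it combines with

    def problems(self) -> List[str]:
        """Return the reasons this requirement is not ready to task."""
        issues = []
        text = self.question.lower()
        if not text.strip() or any(p in text for p in VAGUE_PHRASES):
            issues.append("question is missing or too vague")
        if self.verb.lower() not in ALLOWED_VERBS:
            issues.append("verb is not tied to an objective")
        if not self.decision.strip():
            issues.append("no decision or action depends on the answer")
        if not self.fuses_with:
            issues.append("no integration path, so this creates another silo")
        return issues


vague = CollectionRequirement(
    question="Provide situational awareness",
    verb="observe",
    decision="",
)
specific = CollectionRequirement(
    question="Is material moving across the border at night?",
    verb="validate",
    decision="Decide whether to re-task night patrols",  # invented example
    fuses_with=["signals intelligence", "human reports", "historical data"],
)

print(vague.problems())     # lists every failed check
print(specific.problems())  # empty list: ready to task
```

The point of the sketch is the ordering: the requirement is checked before anything is collected, so a vague tasking fails fast instead of producing reports no one uses.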

This is where software enters the picture.

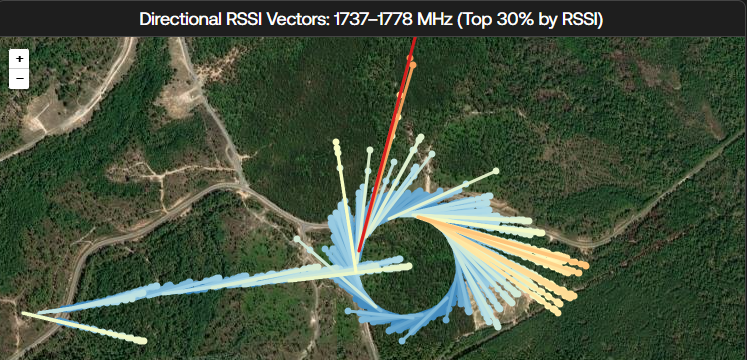

Platforms like IBIS™ change the equation. Natural language queries force outcome thinking. Instead of, "Give me all the drone data collected on Tuesday," you ask, "Show material movement patterns in NAI Seven over the past 96 hours."

Knowledge graphs can combine data from these autonomous platforms with human intelligence, creating mission context instead of a slew of disconnected feeds.
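To make the idea concrete, here is a toy sketch of what linking drone and human reporting around a shared node might look like. It is not how IBIS™ works internally. The records, field names, timestamps, and the `query` function are all invented for illustration, and a production knowledge graph would be far richer than a dictionary keyed on area and event.

```python
from collections import defaultdict
from datetime import datetime, timedelta

# Hypothetical records; every value here is made up for the example.
NOW = datetime(2024, 1, 10, 12, 0)
observations = [
    {"source": "drone", "area": "NAI Seven", "event": "material movement",
     "time": NOW - timedelta(hours=20)},
    {"source": "HUMINT", "area": "NAI Seven", "event": "material movement",
     "time": NOW - timedelta(hours=50)},
    {"source": "drone", "area": "NAI Seven", "event": "material movement",
     "time": NOW - timedelta(hours=200)},
    {"source": "drone", "area": "NAI Three", "event": "vehicle traffic",
     "time": NOW - timedelta(hours=5)},
]

# Tiny graph: each (area, event) node links to the observations that support it,
# regardless of which kind of sensor produced them.
graph = defaultdict(list)
for obs in observations:
    graph[(obs["area"], obs["event"])].append(obs)


def query(area, event, hours, now=NOW):
    """Return observations for one area and event within the last `hours`, newest first."""
    cutoff = now - timedelta(hours=hours)
    hits = [o for o in graph.get((area, event), []) if o["time"] >= cutoff]
    return sorted(hits, key=lambda o: o["time"], reverse=True)


# Structured form of "Show material movement patterns in NAI Seven over the past 96 hours."
results = query("NAI Seven", "material movement", hours=96)
sources = sorted({o["source"] for o in results})
print(len(results), sources)
```

Because the query starts from the question (area, event, time window) rather than from a sensor feed, the answer naturally draws on both the drone and the human source.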

Our AI governance ensures collection activities serve a strategy that’s more than “fill up all the storage possible.”

AI governance also makes the work repeatable and reusable, whether across separate deployments of IBIS™ or within a single deployment that is repurposed for a different mission.

Think of governance as the stitching that lets the same AI serve multiple use cases as your mission and your data change.

In a recent airborne autonomous systems project, what once required days of data wrangling became queries answered in seconds. Operators could ask mission-critical questions in plain English and receive verified, visualized answers drawn from multiple intelligence sources.

Collection shifted from generating data to answering questions.

Before you approve the next sensor purchase, the next camera upgrade, or the next OSINT data contract, ask one question:

"What question am I looking to answer?"

If you can't articulate the specific decision this sensor will inform, you're about to make your data problem worse. If you can articulate it, you're ready to design a collection that actually serves mission success.

From there, you pair better hardware with a clear objective and process any data using a platform ready to parse through huge amounts of information quickly and accurately. Teams get the answers they’re looking for.

Organizations that get this right turn information overload into operational advantage. Those that don't?

Well, they’re going to pay the price, literally, to keep drowning in data.

If you’d like to see how other organizations are building processes that synthesize both hardware and software to complete their objectives, check out some of our customer stories.

Our 8-week Pilot Partnership Program lets you test IBIS™ with up to 2TB of your own data across three sources.

See how chat-based queries + mission-derived context + AI governance eliminate the tradeoff between speed and accuracy with IBIS™.
